Engineering at MindLink

Parallelizing our CI tests

July 05, 2018

In this second “improving our CI tests” blog we’ll complete our journey by:

- Enabling parallel NUnit test execution at the test-fixture granularity

- Fixing some issues in our CI tests stack that prevented parallelisation

- Reducing our CI test run time by a further 10%

Background

The MindLink .NET CI/test stack looks like:

- TFS 2018

- NUnit

- Moq

See the previous blog post about how we enabled test project-level parallelism and upgraded to NUnit 3.10.

Parallelising Take 2

NUnit 3 now supports running individual tests in parallel. This is at a different level of granularity to the project-level scope supported by the external test runners. We can choose which groups of tests are able to run in parallel by attaching [Parallelizable] attributes at the correct level and scope.

This has some interesting implications for your tests. With project-level granularity, your tests still run in the same environment as they would do otherwise - basically just entire isolated environments running in parallel.

Now, with test-level granularity your tests need to make sure that they can run whilst other tests are running. This of course should be the case for correctly designed Unit and in-memory component tests (and correctly designed code under test).

In our previous post, project-level parallelism gave us a 2x speed-up (on a 2-processor build server). In theory, turning on test-level parallelisation isn’t going to give us any greater speed-up: there are still only 2 cores to execute the synchronously running tests, regardless of who schedules them or how.

However, we decided to look into this anyway because:

- Unless the project-level distribution of the tests is exactly equal across test projects, there will always be the case where there’s one test project left running after all the others have completed. At this stage, we will benefit from test-level parallelisation.

- Developers running the tests individually from ReSharper/Visual Studio will benefit from the test-level parallelisation.

- We should be able to turn on parallelisation - if our tests are written correctly - so it’s an interesting exercise to try.

Turning on Parrallelisation

At this point in the blog I have typed the word “parallel” so many times I’m forgetting how to spell it.

We need to decide at what level we can enable parallelisation. We have a couple of options, based on where we apply the [Parallelizable] attribute, and what scope we define.

- Per-test - all tests can run parallel with each other

- Per-test-fixture - all test fixtures can run parallel with each other, but tests inside run sequentially

- Per-namespace - tests in different namespaces can be run in parallel, but tests inside the same namespace run sequentially

The answer to the above depends on how you’ve written your tests. I think we’re in the same boat as most NUnit teams in that our test fixtures have state that is reset in each [SetUp] method (i.e. we have private fields in each test fixture class). On the up-side, each test depends on that state and that state alone.

    [TestFixture]
    public sealed class MyTestFixture
    {
        private Mock<IMyDependentInterface> mockMyDependentInterface;
        private MyClass myClass;

        [SetUp]
        public void SetUp()
        {
            this.mockMyDependentInterface = new Mock<IMyDependentInterface>();
            this.myClass = new MyClass(this.mockMyDependentInterface.Object);
        }

        [Test]
        public void DoingSomethingWhenSomethingDoesSomething()
        {
            this.mockMyDependentInterface.Setup(...);

            Assert.That(
                this.myClass.DoSomething(),
                Is.EqualTo(Expected));
        }
    }

I should point out that the goal with this work is to enable parallelization:

- With the minimum amount of work/changes/risk possible.

- Zero changes to the actual code (i.e. outside of the tests).

If we can’t achieve parallelization while keeping both of the above true, then we’ll abandon the exercise.

This leads me to the conclusion that we can run all test-fixtures in parallel, but tests inside each fixture must be run in sequence. This isn’t to say we can’t change individual test fixtures - or write new fixtures - to support per-test parallelization in future.

So to do this, I apply the [Parallelizable] attribute to each of our test projects, at the assembly level. The scope argument instructs NUnit that test fixtures can run in parallel with each other, but no two tests inside the same fixture can run at the same time.

[assembly: Parallelizable(ParallelScope.Fixtures)]
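For reference, here is a minimal sketch of how the assembly-level default can be overridden on individual fixtures. It assumes NUnit 3.7 or later, where [NonParallelizable] and ParallelScope.All are available. The namespace, fixture and test names are hypothetical and not taken from our codebase.

```csharp
using NUnit.Framework;

// Assembly-wide default: fixtures may run alongside each other,
// while the tests inside each fixture still run one at a time.
[assembly: Parallelizable(ParallelScope.Fixtures)]

namespace Example.Tests // hypothetical namespace
{
    // Opts out of the assembly default, e.g. because the code under test
    // relies on shared static state.
    [TestFixture]
    [NonParallelizable]
    public sealed class LegacyStaticStateTests
    {
        [Test]
        public void DoesNotRunInParallelWithOtherTests()
        {
            Assert.That(1 + 1, Is.EqualTo(2));
        }
    }

    // Opts in to per-test parallelism. This is only safe here because
    // the tests share no fields on the fixture instance.
    [TestFixture]
    [Parallelizable(ParallelScope.All)]
    public sealed class StatelessTests
    {
        [Test]
        public void FirstIndependentTest()
        {
            var input = "abc";
            Assert.That(input.Length, Is.EqualTo(3));
        }

        [Test]
        public void SecondIndependentTest()
        {
            var input = new[] { 1, 2, 3 };
            Assert.That(input, Has.Length.EqualTo(3));
        }
    }
}
```

Keeping the conservative scope at the assembly level and opting individual fixtures in or out means a fixture with private [SetUp] state is never accidentally run with its own tests in parallel.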

And lo and behold, when I run chunks of test fixtures in ReSharper, I can actually see multiple fixtures running in parallel.

So with that, I push and that’s it!

Problems

Except it never is.

Problem Number 1 - Static Variables

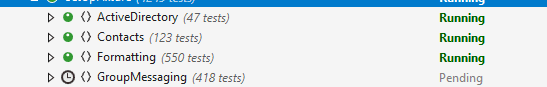

The first thing that happens is 7 tests in the same namespace fail with the same error:

I then come to the realisation: the tests that have failed are testing what can perhaps be described as not the most up-to-date part of our codebase. In fact, there’s a particular set of static variables that the code under test relies upon.

In real life, these variables get initialized when the application starts up. In the test world, each test fixture initializes and tears down these static variables - and worse, each test fixture initializes the variables with slightly different values.

Because the fixtures are now running concurrently, they’re overwriting the variables and it’s causing havoc with the code under test, and the mocked dependencies.

I have a couple of options to fix this - re-write the code under test to avoid the static variables, or fix the way the variables are initialized during the test runs. Removing the static variables breaks our rule that we shouldn’t be making any changes to the actual code. We’ll do this eventually at some point no doubt, but for now the least risk is to lift the initialization of these variables out of the test fixtures themselves.

NUnit has a [OneTimeSetUp] attribute which, when used in a class marked [SetUpFixture], denotes a method that runs once before any of the test fixtures in that namespace. We already use this mechanism to alleviate the boilerplate set-up of some of our “cross-cutting” concerns, like logging. Introducing a new method just for this troublesome namespace allows me to lift the initialization of these static variables into one shared place.

    [OneTimeSetUp]
    public override void TestFixtureSetUp()
    {
        // Run the shared set-up (logging etc.) first.
        base.TestFixtureSetUp();

        // Initialize the static variables once for the whole namespace.
        ChannelService.ChatConnectionChatRoomPrefix = DummyChatConnectionChatRoomPrefix;
        ChannelService.ChatConnectionUserPrefix = DummyChatConnectionUserPrefix;
    }

I also remove the equivalent code from each of the offending child fixtures, and update the tests/mocks to understand these new constant values. If anything, this does more closely mirror how the code runs in real life.

Problem Number 2 - Cross-Cutting Dependencies

So I push the previous fix and all of a sudden more problems start occurring.

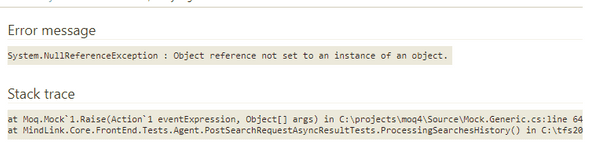

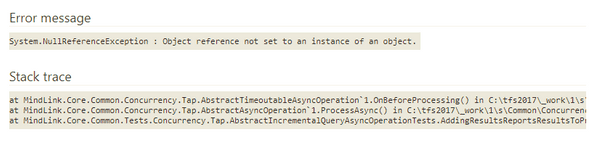

In particular, loads of errors like the below:

I come to the second realisation - we have cross-cutting behaviour that’s being mocked out individually in each test fixture. The test fixtures are again overwriting each other’s attempts at this global mocking.

Just to take a step back for a second:

- Our code models certain cross-cutting concerns via a “service locator” pattern

- The “service locator” is a global static instance. The locator’s implementation can vary, but it’s initialized when the application starts and you just always presume it’s there, and that you can fetch various services from it. So for example I can retrieve an ICatService implementation by going:

    var myCatService = ServiceLocator.Instance.GetService<ICatService>()

- This is about as awkward as it sounds. We have concrete dependencies on the ServiceLocator.Instance. We have no real control over which instances end up with references to which service implementation.

- We’ve generally removed this pattern, but it is still used in a number of places for obtaining access to our ISchedulerService instance. This is pretty much used by anything that wants to schedule a callback - immediately on the threadpool, or as a timed callback etc.

So we need to make sure this mechanism works when running our tests. This means in each test setup we have to:

- Set the ServiceLocator.Instance guy as a stubbed IServiceLocator implementation that’ll let us register an ISchedulerService instance for retrieval by the code under test

- Register a mock ISchedulerService with the stub service locator. We typically set up the ISchedulerService’s mocked behaviour as part of this (for instance, simulating the callback). The code under test will then retrieve this instance from the service locator instance.

You can probably see where the problem is - test fixtures are competing for the mocking of the service locator instance.

Unfortunately this pattern is in a lot of places in the code, and it’s just not feasible to refactor away from this pattern in one fell swoop.

To fix this, we somehow need to mock a static instance, but keep how we’ve mocked that instance unique in the context of each concurrently running test fixture. This sounds like something thready - but it’s worse than that: we use async/await a lot in the code, so tests don’t even necessarily run on their own thread. Rather, they run in their own async execution context.

At this point I give up and go to the pub.

About 3 pints in and it dawns on me.

The first observation is that I need to stop each test-fixture overwriting the ServiceLocator.Instance singleton. This means we need to initialize this guy (with something) once per test project.

Now - what do we set the global instance as? My second observation is that whatever it is, I need a way for each test fixture to register their own ISchedulerService instance with it, and for their code-under-test to see the same instance. So there needs to be a logical affinity between the test-code registering the service instance, and the code-under-test fetching the service instance. And this affinity needs to flow across async control flow.

System.Threading.AsyncLocal<T> to the rescue!
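As a standalone illustration of the behaviour being relied on, here is a minimal console sketch (not our test code). It shows that a value stored in an AsyncLocal<T> follows its own async flow across an await, while two concurrent flows stay isolated from each other. The class and method names are hypothetical, the two tasks merely simulate two test fixtures, and the async Main assumes C# 7.1 or later.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class AsyncLocalDemo
{
    // Hypothetical stand-in for the shared slot each fixture writes to.
    private static readonly AsyncLocal<string> Current = new AsyncLocal<string>();

    // Simulates code under test reading the ambient value after an await.
    private static async Task<string> ReadAfterAwaitAsync()
    {
        // The continuation may resume on a different thread pool thread.
        await Task.Delay(10).ConfigureAwait(false);
        return Current.Value;
    }

    // Simulates one fixture: register its own value, then run the code under test.
    public static async Task<string> RunFixtureAsync(string name)
    {
        Current.Value = name;
        return await ReadAfterAwaitAsync();
    }

    public static async Task Main()
    {
        // Each Task.Run flow gets its own copy of the execution context,
        // so the values set inside do not leak into each other or back out.
        var a = Task.Run(() => RunFixtureAsync("fixture-A"));
        var b = Task.Run(() => RunFixtureAsync("fixture-B"));
        var results = await Task.WhenAll(a, b);

        Console.WriteLine(results[0]);                // fixture-A
        Console.WriteLine(results[1]);                // fixture-B
        Console.WriteLine(Current.Value ?? "(null)"); // (null)
    }
}
```

This only demonstrates the AsyncLocal<T> semantics in isolation. How a particular test runner flows the execution context between set-up and test methods is not something this sketch covers.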

So I come up with this as my new global test fixture setup:

    public static readonly AsyncLocal<ISchedulerService> SchedulerServiceAsyncLocal = new AsyncLocal<ISchedulerService>();

    [OneTimeSetUp]
    public virtual void TestFixtureSetUp()
    {
        var mockServiceLocator = new Mock<IServiceLocator>();

        mockServiceLocator.Setup(sl => sl.GetObjectOfType<ISchedulerService>())
            .Returns(() => SchedulerServiceAsyncLocal.Value);

        ServiceLocator.Instance = mockServiceLocator.Object;
    }

To clarify:

- I’ve created a global AsyncLocal object that all the test fixtures can see and set their own ISchedulerService instance on.

- I’ve created a mocked ServiceLocator object using Moq that will serve requests for ISchedulerService instances by fetching the current value from the AsyncLocal. This mock ServiceLocator is initialized once per test project and shared across all concurrently running test fixtures.

Each test in a test fixture then registers its mock instance a la:

    var mockSchedulerService = new Mock<ISchedulerService>();
    SetUpFixture.SchedulerServiceAsyncLocal.Value = mockSchedulerService.Object;

This means that:

- Each test can fiddle about with their own ISchedulerService mock instance, isolated from tests in other fixtures

- All code under test (running in the same async execution flow) will get the same instance as the corresponding set up code registered.

So we have the exact same static-mocking behaviour as before, but our test fixtures can run in parallel!

I’m actually strangely pleased with myself that I came up with this.

Concluding

So anyway, after that change all the tests started reliably passing. I don’t think the problems we found were actually too bad on a codebase of our size. Work to resolve the static references in the older bits of our codebase continues.

And now our build timings:

- Unit tests - 1:44 (this actually isn’t any faster than our result from the first blog post, as predicted. Though this is a couple of weeks later, and we do have a few more tests now)

- Behaviour tests - 1:36 (trimmed a minute off here - there’s more of an uneven distribution of tests between projects in this step)

And running from ReSharper is now faster than lightning!

Written by Ben Osborne.

Craft beer and cats.